Boston Dynamics, along with other companies in the robotics industry, has signed an open letter announcing that it will not arm its robots and will not support other companies in doing so. What has happened to bring us to this point?

Robots armed with explosives in the US

At the end of November 2022, the news broke that the San Francisco police could deploy robots capable of killing. According to the police, “this type of robot will only be used in extremely dangerous situations, which seek to protect innocent lives.” They added that, in exceptional cases, “the machines may be equipped with explosives.”

But armed robots had been used before. That is precisely what happened in Dallas in 2016. A sniper had killed five police officers and wounded seven others. In these circumstances, the police claimed that they were forced to send a robot, a PackBot, towards the criminal.

The scene: the robot approaches, trailing the electric hum of the motors that drive its gears. The sniper is bewildered. Is it bringing the demands he has negotiated with the police? The robot keeps getting closer. A little bit closer. The criminal hesitates over whether to shoot it; he just yells nervously at the police. He doesn’t understand anything.

The robot gets a little closer, and boom! Its C-4 explosive charge kills the sniper, Micah Johnson. Johnson was black, a US Army Reserve veteran of the Afghanistan War who was reportedly angered by police shootings of black men and stated that he wanted to kill white people, especially white police officers. It is believed to be the first time that a US police department used a robot to kill a suspect. In 2018, the police officers were acquitted in court.

Digidog, the New York Police robot dog

In 2020 and 2021, it was the NYPD that began to use the Digidog robot, created by Boston Dynamics. They used it to inspect suspicious homes, including an apartment in the Bronx after a kidnapping. It has also been used to negotiate with the kidnapper of a mother and her baby, and even to bring food to some robbers.

In all of these cases, the security forces gave scant explanation for the robot's involvement. And that’s really the ethical problem with these machines. Can the use of this type of weapon guarantee respect for citizens' rights and avoid unnecessary violence against a criminal? Can each of the robot’s actions be justified in detail?

After the search of the apartment in the Bronx, Congresswoman Alexandria Ocasio-Cortez raised the alarm about Digidogs being deployed only in lower-class neighborhoods.

By this point, there are plenty of reasons to understand why Boston Dynamics, together with other companies in the robotics sector (Agility Robotics, ANYbotics, Clearpath, Open Robotics and Unitree Robotics), has signed an open letter in which they announce that “they will not arm their advanced mobility general purpose robots or the software they develop that enables advanced robotics, and they will not support other companies in this purpose.”

But beware! The devil is in the details. In the letter they do not clarify (deliberately?) what they mean by general purpose robots. In addition, they leave the door open for the robots to be used in surveillance or people-recognition missions, as they already are.

The war in Ukraine and other earlier armed conflicts have also brought to light the use of robots, especially drones, on the battlefield.

Both police and military robots fall under the ethical debate on autonomous weapons. In fact, their regulation is currently being debated at the United Nations.

China will not sign any agreement for the use of autonomous weapons

It has been a century since the philosopher and sociologist Antonio Gramsci said that we must be pessimists of the intellect and optimists of the will. However, there does not seem to be any will to reach an agreement. From the outset, China has announced that it does not intend to sign any agreement, and the United States and other major military powers are investing heavily in these types of armed robotic systems. The conflict in Ukraine has been the straw that broke the camel’s back.

This ethical debate rests on some very slippery ground. Can we consider an automatic missile guidance system, like those that have existed for decades, an autonomous weapon? Or an antipersonnel mine, which does not distinguish between allies and enemies?

At the moment, the scientific community does not even have a clear definition of what an autonomous weapon is.

China claims that its interest in developing artificial intelligence weapons is not related to the automatic killing of people, but to predictive maintenance, battlefield analysis, autonomous navigation and target recognition. Perhaps in the military world, it doesn’t even make sense to allow an autonomous weapon that behaves unpredictably and kills without human control.

Drones in the spotlight

In 2021, many media outlets reported that, for the first time in history, a drone had killed a person fully autonomously, in the Libyan conflict. However, that drone never existed, according to the manufacturer itself. But that news was barely reported.

Resistance to change has always been a lever for human impulses and concerns. Consider the feat of the Wright brothers and their flying machine, which stirred up great popular controversy about the possible use of such devices in warfare. H. G. Wells's 1907 novel The War in the Air is proof of that unease.

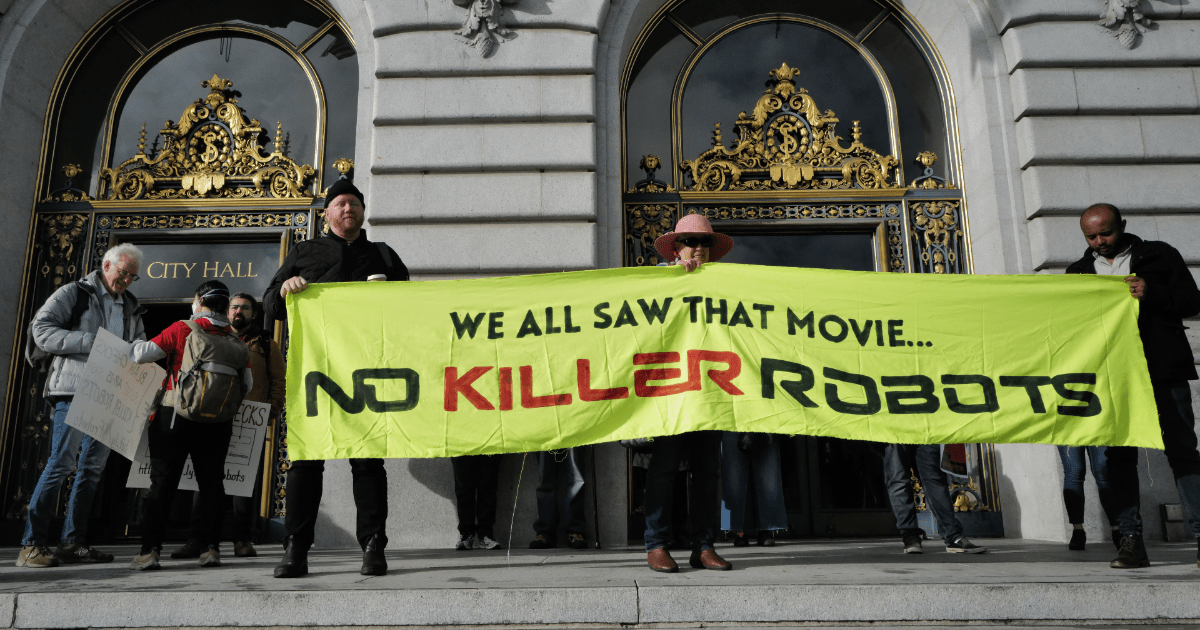

Given all this commotion, on December 7 the city of San Francisco reversed course and will not, for the moment, allow its police to deploy robots capable of killing.

David Collingridge, a professor at Aston University in the UK, published The Social Control of Technology in 1980, setting out the dilemma that bears his name, Collingridge’s dilemma: “When change is easy, the need for it cannot be foreseen. When the need for change is evident, change becomes costly, difficult, and time-consuming.”

Surprisingly, this paradox remains very topical today.

Julian Estevez Sanz, Professor and researcher in Robotics and Artificial Intelligence, University of the Basque Country / Euskal Herriko Unibertsitatea

This article was originally published on The Conversation. Read the original.